If you’re interested in cascading parameters and Power BI custom connectors are an option for your project, you should give it a …

Author: Igor Cotruta

Exposing #shared in Azure Analysis Services

While I enjoy being able to write Power Query expressions everywhere in Analysis Services now, the fact that I don’t know …

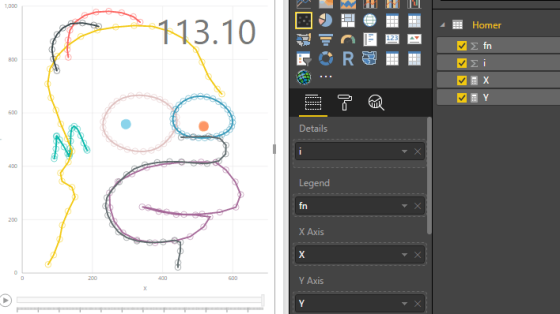

Drawing Homer Simpson with DAX trigonometric functions

Recently I started exploring the limits of standard charts in Power BI and ended up drawing all sorts of mathematical …

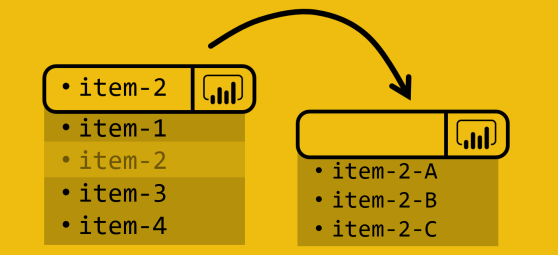

Navigating 600+ M functions

Whenever I’m faced with a data mashup problem in Power BI, I try to check if it can be resolved …

Exporting Power Query tables to SQL Server

Detailing a few ways of sending millions of valuable records from a Power Query table to SQL Server

4 ways to get USERNAME in Power Query

Whatever your requirements, whether providing user context while slicing data or a filtering mechanism for querying it, having a function like USERNAME() …

Extracting Power Queries

Yet another method of retrieving Power Queries from Power BI workbooks

R, Power Query and msmdsrv.exe port number

Using R.Execute() in Power Query to get the connection details of the $Embedded$ tabular model

R.Execute(): the Swiss Army knife of Power Query

The R ways in Power Query: how R.Execute() can be used for non-statistical tasks in Power BI

Power Query matrix multiplication

How to do matrix multiplication in Power Query
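One common way to multiply matrices with table tools (joins and group-bys rather than loops) is to store each matrix as a "long" table of (row, column, value) records, join the two tables on the shared inner index, multiply the paired values, then group by (row, column) and sum. The Python sketch below illustrates that join-and-aggregate idea; it is an assumption about how the technique can be expressed, not a transcription of the post's M code, and the function name `matmul_long` is hypothetical.

```python
from collections import defaultdict

# Hypothetical long-table layout: each record is (row, col, value),
# similar to how a matrix might be held in a Power Query table.
Record = tuple[int, int, float]


def matmul_long(a: list[Record], b: list[Record]) -> dict[tuple[int, int], float]:
    """Multiply two matrices stored as (row, col, value) tables.

    1. Join A and B on A.col == B.row (the shared inner index).
    2. Multiply the paired values.
    3. Group by (A.row, B.col) and sum the products.
    Entries absent from either table are treated as zero.
    """
    # Index B by its row number so the join becomes a lookup.
    b_by_row: dict[int, list[tuple[int, float]]] = defaultdict(list)
    for k, j, value in b:
        b_by_row[k].append((j, value))

    # Accumulate products per output cell (the group-by + sum step).
    result: dict[tuple[int, int], float] = defaultdict(float)
    for i, k, a_value in a:
        for j, b_value in b_by_row.get(k, []):
            result[(i, j)] += a_value * b_value
    return dict(result)


# Usage: [[1, 2], [3, 4]] x [[5, 6], [7, 8]]
a = [(0, 0, 1), (0, 1, 2), (1, 0, 3), (1, 1, 4)]
b = [(0, 0, 5), (0, 1, 6), (1, 0, 7), (1, 1, 8)]
print(matmul_long(a, b))
# {(0, 0): 19.0, (0, 1): 22.0, (1, 0): 43.0, (1, 1): 50.0}
```

The long layout keeps the operation expressible as a single merge followed by a single aggregation, which is the kind of step a table-oriented tool handles well; it also handles sparse matrices naturally, since missing cells simply never join.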

How much data can fit in Power BI Desktop

Going through the steps of fitting 4,294,967,294 rows of data or 1,999,999,981 unique values and 15,986 columns into a Data Model table while using 55GB of RAM.
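For context, the row count quoted above sits exactly two below a power of two. This is only the arithmetic; reading it as a sign of a 32-bit ceiling is an inference, not something the excerpt states.

```latex
2^{32} = 4{,}294{,}967{,}296
\quad\Rightarrow\quad
4{,}294{,}967{,}294 = 2^{32} - 2
```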